What is the future of data centers? Do any of the current global crises change your thinking about your next IT installation? And as mundane as it may seem, how can IT enclosure design be updated properly and efficiently? I mean, it’s just a big metal box.

My goal with this article is not to raise unanswerable questions and leave you hanging. Yet, given what we’ve lived through the past few years, these are some tough ones!

So, I will answer the final question first.

Dimensions do not change – we still have to deal with height, width and depth … and the number of rack units. We have to move the heat out, find room for all of those cables, figure out how many will be needed and, of course, someone will STILL bring up the budget.

Updating current IT enclosure design is not about changing core metrics; it is about the specialization within them. Sound interesting? Read on!

This is the “New Normal.” Get Used to It.

Looking toward the future requires a brief look back, so here are some quick thoughts.

How far into a global pandemic do we need to get before we consider our situation to be the “new normal”? How about 29 months? As of this writing, that is where we are in this huge social and economic re-engineering event, and the changes are ongoing.

The “great resignation” morphed into the “great reshuffling,” and now, perhaps, we’re in the “great regret.” That all sounds great, but what have we really learned as IT professionals over the past 2+ years? What has the industry learned? What is new for you? How has business changed?

Managing Issues Has Gone from Important to Critical

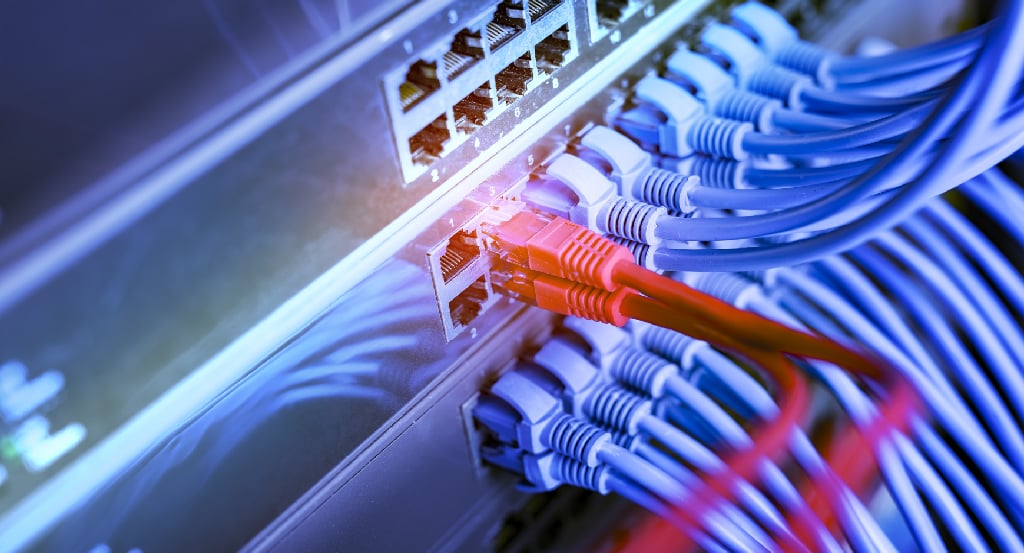

Whatever your answers and lessons, the ability to manage IT effectively has grown more and more important. Consider all of the things an IT professional needs to manage: site selection, installation space (including constrained space), enclosure dimensions, component deployment, cabling, thermal management (airflow), and much more.

Managing these issues effectively leads to holistic, long-term solutions, including the ability to properly update enclosure design for the next IT deployment.

The list above can kick-start future data center planning, but looking back is just as important when planning for what is to come. What has NOT happened that we thought would? What can we learn from failed predictions?

What Predictions Haven’t Come to Fruition (Yet)?

Anyone can predict anything. The internet is wonderful for that, isn’t it? I do not make predictions, but I definitely look ahead. Pre-pandemic, I had a feeling about where thermal management of IT enclosures would go: toward universal use of high-density cooling solutions. Was I right?

Yes and no. The heat loads within IT cabinets have continued to increase, although installation densities did not grow as fast as predicted, which reminds me of Moore’s Law.

Heresy Warning: In its strictest definition (the number of transistors on a microchip, and with it the speed and capability of computers, doubling every two years), Moore’s Law is no longer holding. Improvements are still exponential, just at a slower pace. The same applies to heat loads: they keep rising, but not yet fast enough to make liquid cooling mandatory.

Virtualization (consolidating multiple servers onto a single hardware platform) has not led to the predicted reduction in overall installation space. More space than ever is needed (Ashburn, Virginia, anyone?), and that space is being filled quite well.

Here is another prediction that only came close. As individuals, we were practically promised our own web servers with our own domains, hosted in IT cabinets inside colocation sites. In reality, we have our social media accounts, our email addresses, and our Google accounts, which, collectively, come close to being our own websites.

Many predictions, therefore, have not come to fruition, at least not in the ways we thought they might. However, most predictions still have an impact on the future of data centers.

The Core Metrics Are the Same

The fundamentals have not changed: core dimensions, configuration, competencies, and capabilities … they all remain the same.

For instance, footprint dimensions (24 inches wide and 48 inches deep) have not changed, but is there a need for specialization? Perhaps in height?

Cabinets typically come in 42RU, 48RU and similar heights. Pushing beyond 50-55RU may be possible, but is it practical? Lifting a 300-pound server into a rack that tall is no simple task. Getting it back down is even worse.
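To put cabinet heights into numbers: under the EIA-310 rack standard, one rack unit is 1.75 inches (44.45 mm) of vertical mounting space. The short Python sketch below converts RU counts into rail space. It is only an approximation of the rail space; it deliberately leaves out the base, casters and roof, which vary by vendor, so real floor-to-top heights are taller still.

```python
# Rack-unit arithmetic sketch. Assumes the EIA-310 definition of one rack
# unit (1RU) as 1.75 inches of vertical mounting space. Base, casters and
# roof are NOT included; those dimensions are vendor-specific.
RU_INCHES = 1.75
MM_PER_INCH = 25.4


def mounting_height_in(rack_units: int) -> float:
    """Return the vertical rail space, in inches, for a given RU count."""
    return rack_units * RU_INCHES


if __name__ == "__main__":
    for ru in (42, 45, 48, 55):
        inches = mounting_height_in(ru)
        # Also show millimetres for metric-spec cabinets.
        print(f"{ru}RU: {inches:.2f} in ({inches * MM_PER_INCH:.0f} mm) of rail space")
```

At 55RU, the rail space alone is 96.25 inches, so the top positions sit more than eight feet off the floor before the cabinet base is even counted.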

So, while core parameters remain the same, updating is still possible and often needed for successful deployments. For instance, is high-density cooling an appropriate update for you at this time? Do next-generation appliances (rack-mount servers, storage, new chip technology, etc.) imply new requirements?

These levels of specialization must be considered.

“But, We’ve Always Done It That Way.”

I have heard this more often from customers than I care to admit: “We need a 45 rack unit (RU) tall cabinet because we have always used a 45 rack unit tall cabinet.”

Really? Why? Is that how the important decision of selecting IT enclosures is going to go? Modern demands are driving different solutions, and those in charge of choosing need to see the bigger picture.

Working with our 45RU example: not all IT cabinet vendors supply the same product. The doors are different, the walls are different, the locks are different. Even if all are 45RU, their overall heights differ. They may even come in slightly different shades of white, gray or black. Yes, they are all 45RU, but that’s about it.

“Those who cannot remember the past are condemned to repeat it.” Thank you, George Santayana (wow, Santayana in the data center), for inspiring Winston Churchill and numerous others. Not every past data center deployment was perfect, so simply repeating a poor choice of IT cabinets is an easily preventable mistake. In other words, doing something just because “we’ve always done it that way” really closes the door on the next IT deployment.

Your IT Cabinets Need to Solve More Challenges Than You Think

For all the predictions made pre-pandemic, there is one change that few saw coming: the Edge. Once upon a time, data centers alone were thought to be the answer, but now it is necessary to put processing power close to the point where data is generated: at the Edge.

Edge deployments, anywhere that is not a data center, can be surprisingly complex and loaded with challenges. Striving to get the most flexibility, cost-efficiency, and speed from Edge computing solutions has taught us lessons that are applicable to data centers.

The biggest lesson is that IT cabinets need to do more than hold components. Each could be configured with:

- High-density cooling system — Preventing equipment from overheating minimizes the likelihood of failure; for increased heat removal capacities, look to closed-loop systems such as Rittal’s LCP Systems

- Security system — Protecting significant investments means your last line of defense, the IT enclosure, must be ready for all threats

- Power distribution unit — Getting reliable network power to multiple devices from an uninterruptible power supply (UPS) system keeps a facility running

- Redundancy system — Downtime can cost an organization thousands of dollars every minute; the good news is that it is preventable

- Fire suppression system — Being ready for the worst is always a smart idea

A pre-engineered platform design, or bundle, comes fully packaged, with all components arriving together. No fabricating and modifying to fit the space.

Of course, there are dos and don’ts for every deployment type, which brings us to finding industry guidance: a true partner to help you. How do you know whether your vendor of choice today will even be there for your next IT deployment?

This is just the start of the conversation. Let’s talk about how tall, how wide and how deep. How can the dimensions AND the usable internal volume be maximized? Everybody talks about height, width and depth; I talk about the internal volume that can be used. How many devices need to be installed in a given footprint vs. how many can actually be installed? How about power? How much electricity needs to be provided vs. how much is actually available? And, of course, when IT appliances are plugged in, they get hot. How will that heat be removed?
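The space, power and heat questions above can be framed as a simple capacity check. The Python sketch below is illustrative only: the device list, the 350 W per-server draw and the 12 kW of available cabinet power are hypothetical numbers, and it relies on the common rule of thumb that practically all power drawn by IT equipment is released as heat (1 W is about 3.412 BTU/h).

```python
from dataclasses import dataclass

# Rule of thumb: practically every watt drawn by IT equipment becomes heat,
# and 1 W is about 3.412 BTU/h.
WATTS_TO_BTU_PER_HR = 3.412


@dataclass
class Device:
    name: str
    rack_units: int
    watts: float  # hypothetical steady-state power draw


@dataclass
class Cabinet:
    rack_units: int
    available_watts: float  # hypothetical power actually provisioned to the cabinet


def check_fit(cabinet: Cabinet, devices: list[Device]) -> dict[str, float]:
    """Compare what the devices need against what the cabinet offers."""
    used_ru = sum(d.rack_units for d in devices)
    needed_watts = sum(d.watts for d in devices)
    return {
        "used_ru": used_ru,
        "free_ru": cabinet.rack_units - used_ru,  # negative means the devices do not fit
        "needed_watts": needed_watts,
        "power_headroom_watts": cabinet.available_watts - needed_watts,  # negative means underpowered
        "heat_btu_per_hr": needed_watts * WATTS_TO_BTU_PER_HR,  # heat the cooling must remove
    }


if __name__ == "__main__":
    # Hypothetical example: forty 1RU servers at 350 W each in a 42RU, 12 kW cabinet.
    cabinet = Cabinet(rack_units=42, available_watts=12_000)
    servers = [Device(f"server-{i}", rack_units=1, watts=350) for i in range(40)]
    for key, value in check_fit(cabinet, servers).items():
        print(f"{key}: {value:,.0f}")
```

In this hypothetical case, the 40 servers fit in the 42RU cabinet with 2RU to spare, but they need 2,000 W more than the cabinet has available, and the roughly 47,768 BTU/h of heat they produce still has to be removed. Space is rarely the only constraint.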

And as stated above, someone always brings up the budget. Keep costs in perspective: consider how much money all of those IT devices represent when even a 1RU “pizza box” server (from any vendor) can cost $2,000-$3,000 … EACH. Multiply that by 40 in just one footprint, and you have $80,000-$120,000 of equipment. Let’s not be “penny wise and pound foolish.”

Rittal is a proven provider with experts who work closely with IT professionals to understand unique needs and make recommendations that help future-proof a data center.

Looking for more future-focused information? This eBook fully explains Edge computing’s benefits for 2022 and beyond. Want to connect immediately? Simply call Rittal at 847-240-4630.